You've had a fantastic idea to grow your business. You know you'll need to create some new resources, build a few automations and create a go-live timeline. You're excited about your idea but daunted by the practicalities.

Will you need to purchase additional software? How will you ensure the new automations won't interfere with existing ones? What are the risks involved with the project?

As your business scales, putting new ideas into action becomes more complex. You need to win the hearts and minds of your colleagues, avoid creating redundancies between systems and ensure that your idea is practical in the long run.

These business-critical considerations quickly mount up and become daunting.

At SpotDev, we've developed a process that reliably produces a clear report covering all of the complexities of your next project. Using well-established principles from proven project management frameworks, we have turned our Diagnostic Engagement into a process trusted by businesses throughout the world.

Use cases, user stories and acceptance criteria

The goal of a Diagnostic Engagement is to take your idea and break it down into clearly defined chunks. Each chunk is referred to as a use case and is defined in the form of a short description of why the end user might want to achieve a specific result.

This use case is then clarified with two elements adapted from standard software development practice:

- User story: a description of who will use a resource, what they would do and why they would do it

- Acceptance criteria: an outline of how the user will access the result and what the outcome would be.

When combined, these three elements show us:

- Why we are building a new resource

- Who we are building it for

- How we know we built it properly.

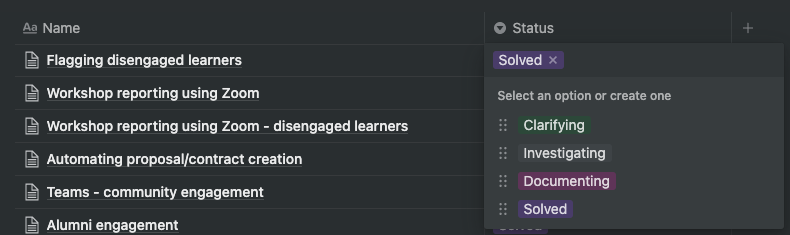

When you engage SpotDev for a Diagnostic Engagement, we will start by gathering all of your use cases and then conduct a four-part process:

- Clarifying: working with you to define the user story and acceptance criteria

- Investigating: reviewing the different methods that will successfully deliver on your use case

- Documenting: selecting the best solution and documenting it in plain English and, if appropriate, a series of flowcharts

- Solved: presenting the solution to you for approval.

This blog post is about user stories and acceptance criteria, so we'll focus on the 'clarifying' stage here.

Examples of user stories

When documenting your user stories, we use an established format from agile project management. The format is:

- As a [user]

- I want to [goal]

- So that [why they need to achieve the goal].

Some examples of user stories would be:

As a community manager, I want to assess the level of engagement within our community portal, so that I can reduce churn and help members to get value from the portal.

As a learner in an online course, I want to instantly receive the certificate when I've completed the exam, so that I can use my new certificate when applying for jobs.

As a salesperson, I want to automatically send customised proposals to prospects, so that I can send proposals out faster than our competitors.
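The three-part format above can be sketched as a small data structure. This is only an illustration: the `UserStory` class and its field names are hypothetical and are not part of any SpotDev tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserStory:
    """One user story in the 'As a / I want to / So that' format.

    Hypothetical helper for illustration only.
    """

    user: str    # who will use the resource
    goal: str    # what they want to do
    reason: str  # why they need to achieve the goal

    def render(self) -> str:
        # Join the three parts into the single-sentence form used in the examples.
        return f"As a {self.user}, I want to {self.goal}, so that {self.reason}."


story = UserStory(
    user="learner in an online course",
    goal="instantly receive the certificate when I've completed the exam",
    reason="I can use my new certificate when applying for jobs",
)
print(story.render())
```

Keeping the three parts as separate fields makes it obvious when one is missing, which is usually the 'so that' part.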

Examples of acceptance criteria

Now that you understand who you are creating a resource for and why they would use it, we need to clearly document the definition of success. To achieve this, we borrow a feature of software development processes known as acceptance criteria.

Without clear acceptance criteria, designers and developers could create a solution that delivers on the user story but doesn't meet a key stakeholder's definition of success.

At SpotDev, we use a three-part model for documenting acceptance criteria:

- Given: [starting state]

- When: [trigger action]

- Then: [finishing state].

This results in a series of steps that represent the successful outcome of any deliverable related to this use case.

If our use case were to allow users of a word processing app to make text bold, then our acceptance criteria might be:

Given: that the user has highlighted some text

When: the user clicks the 'bold' button in the toolbar

Then: the highlighted text appears in bold.

This simple set of acceptance criteria tells our designers and developers a lot about the feature, including:

- we need to use the existing 'highlight text' function

- we need to add a 'bold' button to the toolbar

- the bold button needs to trigger a function that changes the weight of just the highlighted text.
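One way to keep criteria like this consistent across a project is to store each one as a structured record rather than free text. The sketch below assumes a hypothetical `AcceptanceCriterion` class; the names are illustrative and not taken from any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceCriterion:
    """One Given/When/Then criterion (hypothetical helper for illustration)."""

    given: str  # starting state
    when: str   # trigger action
    then: str   # finishing state

    def render(self) -> str:
        # Lay the criterion out in the same three-line form used in this post.
        return f"Given: {self.given}\nWhen: {self.when}\nThen: {self.then}."


bold_button = AcceptanceCriterion(
    given="that the user has highlighted some text",
    when="the user clicks the 'bold' button in the toolbar",
    then="the highlighted text appears in bold",
)
print(bold_button.render())
```

Because every criterion has exactly one starting state, one trigger and one finishing state, a reviewer can spot an incomplete criterion at a glance.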

As any user of a modern word processor knows, however, the user probably expects additional methods of making text bold. We can define these with additional acceptance criteria.

For example:

Given: that the user has highlighted some text

When: the user presses 'Control' and 'B' at the same time

Then: the highlighted text appears in bold.

Given: that the user has stopped typing

When: the user presses the 'bold' button in the toolbar

Then: all future text the user types is in bold.

Given: that the user is typing bold text and stops typing

When: the user presses the 'bold' button

Then: all future text the user types is not in bold.
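Criteria written this way translate almost directly into automated checks. The sketch below uses a deliberately tiny, hypothetical `Editor` model (not a real word processor API) and turns each of the four criteria above into a Given/When/Then check. Treating the keyboard shortcut as sharing the button's handler is an assumption made for brevity.

```python
class Editor:
    """Toy word processor model, just detailed enough to check the criteria."""

    def __init__(self, text: str = "") -> None:
        self.chars = list(text)
        self.bold = [False] * len(text)  # one bold flag per character
        self.selection = None            # (start, end) or None
        self.typing_bold = False         # style applied to future text

    def highlight(self, start: int, end: int) -> None:
        self.selection = (start, end)

    def type_text(self, text: str) -> None:
        self.selection = None
        for ch in text:
            self.chars.append(ch)
            self.bold.append(self.typing_bold)

    def click_bold(self) -> None:
        if self.selection is not None:
            start, end = self.selection
            for i in range(start, end):
                self.bold[i] = True      # embolden only the highlighted text
        else:
            self.typing_bold = not self.typing_bold  # toggle future text

    def press_ctrl_b(self) -> None:
        # Assumption: the shortcut shares the toolbar button's handler.
        self.click_bold()

    def bold_text(self) -> str:
        return "".join(c for c, b in zip(self.chars, self.bold) if b)


def check_button_bolds_highlighted_text() -> None:
    editor = Editor("hello world")
    editor.highlight(0, 5)                 # Given: text is highlighted
    editor.click_bold()                    # When: 'bold' button clicked
    assert editor.bold_text() == "hello"   # Then: only that text is bold


def check_shortcut_bolds_highlighted_text() -> None:
    editor = Editor("hello world")
    editor.highlight(6, 11)                # Given: text is highlighted
    editor.press_ctrl_b()                  # When: Control and B pressed
    assert editor.bold_text() == "world"   # Then: only that text is bold


def check_button_makes_future_text_bold() -> None:
    editor = Editor()
    editor.type_text("plain ")             # Given: the user has stopped typing
    editor.click_bold()                    # When: 'bold' button pressed
    editor.type_text("bold")
    assert editor.bold_text() == "bold"    # Then: future text is bold


def check_button_turns_bold_off() -> None:
    editor = Editor()
    editor.click_bold()
    editor.type_text("bold")               # Given: typing bold text, then stops
    editor.click_bold()                    # When: 'bold' button pressed
    editor.type_text(" plain")
    assert editor.bold_text() == "bold"    # Then: future text is not bold


for check in (
    check_button_bolds_highlighted_text,
    check_shortcut_bolds_highlighted_text,
    check_button_makes_future_text_bold,
    check_button_turns_bold_off,
):
    check()
```

Each check maps to exactly one criterion, so a failing check points straight at the expectation that was missed.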

Taking a short amount of time to document the acceptance criteria clearly avoids time-consuming and expensive rewriting of code later. Everyone involved in the project will know exactly what is planned, how it works and what the outcome will be.

The impact of user stories and acceptance criteria

We introduced user stories and acceptance criteria at SpotDev to align with two of our values:

- Dependability: we do what we say we'll do, when we say we'll do it

- Accuracy: we obsess over attention to detail. Our clients know that we will get it right.

Before using user stories and acceptance criteria, we found that we struggled to live up to our own standards of accuracy. While we delivered high-quality resources that were thoroughly QA-tested, they didn't always meet our clients' expectations of how they should behave.

We worked incredibly hard to resolve this with increasingly demanding QA processes and higher expectations of our designers and developers.

And then it hit us...

The problem wasn't in the production part of our process; it was in the requirement gathering stage.

Once we introduced user stories and acceptance criteria as a compulsory part of our requirement gathering process, our clients were dramatically happier with our work. The work itself wasn't any better, but it was significantly more consistent with our clients' expectations.

That's why we launched our Diagnostic Engagement service. We wanted all of our clients to benefit from this process, even if they weren't using our team for production or implementation work.

Book a call to discuss a Diagnostic Engagement

If you have a project you feel would benefit from a Diagnostic Engagement, then you should:

- Review our Diagnostic Engagement service page

- Book a call with a member of our team to discuss your project.