
A site that isn’t being indexed is one of the biggest SEO problems you can face. If your site isn’t indexed, it won’t appear in the search results pages, and you won’t get any organic search traffic. There are many possible reasons for this, but by spotting the issues and working through them, you can resolve the problem and get your site back in the search results.

Before you work through these steps, make sure you have access to Google Search Console for your site. This handy tool can tell you a lot about your site, helping you spot many potential issues and solve some of them.

It hasn’t yet been found by Google

One simple possibility is that Googlebot hasn’t found your site yet, or hasn’t yet found the specific page you are trying to get into the index. This can happen when your crawl budget is being wasted on pages that you don’t need crawled.

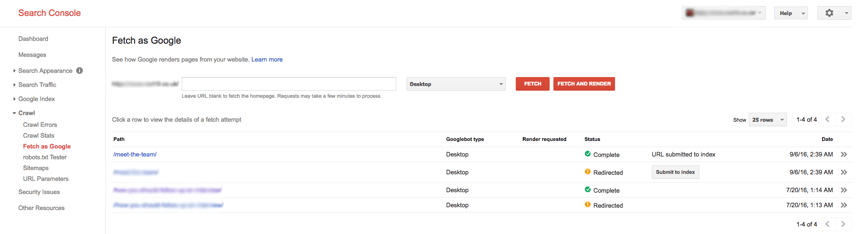

A quick solution is to use Google Search Console to fetch and index the page. In the ‘Crawl’ section of Search Console, you will find the ‘Fetch as Google’ option.

This lets you test whether Google can fetch a page and, once it has been tested, add it to the index right away. You’ll be given the choice between submitting only that URL or also crawling all the URLs linked from it, so choose whichever option suits you best.

Noindex

Your site may have been set to noindex. This might be because it was being worked on, or because the noindex tag was carried over from the development site. If the pages you can’t find in search carry a meta noindex tag, removing it will allow them to be indexed.
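To check a page yourself, the sketch below looks for a noindex directive in the two places Google reads it: a `robots` or `googlebot` meta tag in the HTML, and the `X-Robots-Tag` HTTP response header. It is a minimal Python example using only the standard library; the class and function names are hypothetical, and it assumes you have already downloaded the page’s HTML and response headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases attribute names; attribute values can be None.
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attr_map.get("content", "").lower())


def noindex_sources(html: str, headers: dict[str, str]) -> list[str]:
    """Describe every noindex directive found for the page (empty list if none)."""
    found = []
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    for content in parser.directives:
        tokens = [token.strip() for token in content.split(",")]
        # "none" is shorthand for "noindex, nofollow".
        if "noindex" in tokens or "none" in tokens:
            found.append(f"meta tag: {content}")
    # HTTP header names are case-insensitive, so compare them in lowercase.
    for name, value in headers.items():
        if name.lower() == "x-robots-tag" and "noindex" in value.lower():
            found.append(f"X-Robots-Tag header: {value}")
    return found
```

For example, `noindex_sources('<meta name="robots" content="noindex, follow">', {})` returns `['meta tag: noindex, follow']`, while a page with no directive returns an empty list.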


No sitemap

Having no sitemap makes it that much harder for search engine spiders to navigate your site. Alternatively, crawlers may simply be unable to locate your sitemap. Make sure it is in a format crawlers can understand, and reference it in your robots.txt file so they can find it.
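As an illustration of the sitemaps.org XML format, the following minimal Python sketch builds a sitemap from a list of URLs and produces the `Sitemap:` line to add to robots.txt. The function names and the example.com URLs are hypothetical, and optional tags such as `<lastmod>` are left out to keep it short.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal XML sitemap listing each URL in a <loc> element."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" as the protocol requires.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


def robots_sitemap_line(sitemap_url: str) -> str:
    """Return the robots.txt directive that points crawlers at the sitemap."""
    return f"Sitemap: {sitemap_url}"
```

You might save the output of `build_sitemap(...)` as sitemap.xml at your site root, then add the line returned by `robots_sitemap_line("https://www.example.com/sitemap.xml")` to robots.txt.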

Once you have created a sitemap, or if you think Googlebot has had trouble finding it, you can submit it in Search Console, where you can test and add your sitemap in the ‘Sitemaps’ section of the ‘Crawl’ area.

Blocked by .htaccess

The .htaccess file can be used for a multitude of things, but it may also be the reason crawlers have been blocked. Check the .htaccess file to see whether the Googlebot user agent has been blocked, as this would prevent it from crawling the site.
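If your .htaccess file is long, a quick scan can narrow down which lines to review. This Python sketch is a rough heuristic, not an Apache parser: it flags uncommented `RewriteCond %{HTTP_USER_AGENT}`, `SetEnvIf User-Agent` and `BrowserMatch` lines that mention Googlebot, without working out whether Apache would actually deny the request. The function name is hypothetical, and rules that block Google by IP address will not be caught.

```python
import re

# Directives that commonly match on the requesting user agent in Apache config.
UA_RULE = re.compile(
    r"^\s*(RewriteCond\s+%\{HTTP_USER_AGENT\}|SetEnvIf(NoCase)?\s+User-Agent|BrowserMatch(NoCase)?)",
    re.IGNORECASE,
)


def googlebot_rules(htaccess_text: str) -> list[tuple[int, str]]:
    """Return (line_number, line) pairs for user-agent rules that mention Googlebot.

    Heuristic only: it flags lines for manual review rather than
    evaluating what Apache would do with them.
    """
    hits = []
    for lineno, line in enumerate(htaccess_text.splitlines(), start=1):
        if line.lstrip().startswith("#"):
            continue  # skip commented-out lines
        if UA_RULE.match(line) and "googlebot" in line.lower():
            hits.append((lineno, line.strip()))
    return hits
```

Any line it reports is worth reading alongside the `RewriteRule` or `Deny` directive that follows it, since that is what decides whether the request is refused.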

Manual penalty

Your site might have been hit with a manual penalty. To check, head to the ‘Manual Actions’ section of the ‘Search Traffic’ tab, where you can see whether any manual webspam actions have been taken against your site. For a list of all the possibilities, and how Google recommend you solve them, check the help section here.

If any have, you’ll need to make fixing them a priority, as your site won’t be allowed back into the Google index until the issues are resolved and the site has been manually inspected. For a guide to doing this, and to see some examples of what the process might look like, check out this blog post from Kissmetrics on the subject.

Crawlers are having issues accessing your site

There are a number of reasons why crawlers might have trouble accessing your site. Problems with your robots.txt file are one cause, but other issues can also prevent crawlers from finding all the content on your site. The ‘Crawl Errors’ report in the ‘Crawl’ section shows a list of errors on your site. Of particular interest are server errors, which suggest the crawler was unable to access your site because it was down.
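For robots.txt problems specifically, Python’s standard `urllib.robotparser` module can tell you whether a given set of rules allows Googlebot to fetch a URL. The sketch below is a minimal example with a hypothetical helper name and example.com URLs. One caveat: Python’s parser applies the first matching rule in file order, whereas Google uses the most specific (longest) matching rule, so treat results for overlapping Allow and Disallow lines as a hint rather than a verdict.

```python
from urllib.robotparser import RobotFileParser


def googlebot_can_fetch(robots_txt: str, url: str) -> bool:
    """Return True if the given robots.txt rules let Googlebot fetch the URL."""
    parser = RobotFileParser()
    # parse() takes the file as a list of lines and marks the rules as loaded.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)
```

With rules of `User-agent: *` followed by `Disallow: /`, the function returns `False` for every URL, which is the classic leftover from a development site. To test a live file instead, `RobotFileParser` also offers `set_url()` and `read()` to download robots.txt before calling `can_fetch()`.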

If you’re feeling particularly brave, you can consult the log files of your website. You can use a tool such as the Screaming Frog Log File Analyser, or do the analysis manually. Builtvisible have produced an excellent guide to log file analysis, which you can read here.

Log file analysis can help you spot problems crawlers are having getting around your site. Your site may be so large that crawlers struggle to reach the content you want indexed, or long page load times may be reducing how much of your site Google crawls.
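If you do the analysis manually, a short script can pull Googlebot’s requests out of an access log. This Python sketch assumes the Apache/Nginx “combined” log format and uses a hypothetical function name. It counts Googlebot hits by status code (a run of 5xx responses points to the server errors mentioned above) and by path (showing where crawl activity is actually going).

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format (assumed; adjust to your server's format).
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_summary(lines):
    """Count Googlebot requests by status code and by path from access-log lines."""
    statuses, paths = Counter(), Counter()
    for line in lines:
        match = COMBINED.match(line)
        if not match or "googlebot" not in match["agent"].lower():
            continue  # unparsable line, or a client not claiming to be Googlebot
        statuses[match["status"]] += 1
        paths[match["path"]] += 1
    return statuses, paths
```

Note that anyone can put “Googlebot” in a user-agent string, so for a rigorous audit verify the requesting IP addresses with a reverse DNS lookup, as Google recommends.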

By working through these common problems, you should be able to identify what is affecting your website, get it crawled, and get it back into the index. Remember that you can use the ‘Fetch as Google’ tool in Search Console to submit your pages to the index quickly and get them back online faster.